Generative AI for the analysis of sensitive enterprise data

How companies can benefit from Generative AI

ChatGPT has made Artificial Intelligence popular within a very short time. Anyone can quickly and easily set up an account at OpenAI and form their own impression of the functionalities and capabilities of Generative Pre-trained Transformers (GPT). The precision with which the majority of queries are answered is impressive.

The question quickly arises as to how companies can benefit from the new AI technologies. After all, the fields of application of generative AI models in enterprises are manifold. They range from customer service and customer dialog, where automatically answering customer queries keeps a company reachable 24/7, to Speech2Text applications with integrated translation services, to ChatGPT-like query and analysis options for the company's internal data treasure trove. At present, however, public AI services such as ChatGPT from OpenAI are still difficult to imagine in daily enterprise use. On the one hand, the ChatGPT models lack the domain-specific data of enterprises; on the other hand, enterprises cannot and do not want to make their internal data available to a public AI service for various reasons (e.g. data protection, regulation, competition). In this article, we highlight the ways in which Generative AI models can be used to analyze confidential enterprise data and the options available for implementation - on-premise or in private clouds.

Generative AI for the analysis of sensitive company data

The fields of application of generative AI models are manifold, primarily because the models can be used so flexibly. They can be used discriminatively, in the sense of "Discriminative AI", for example to answer questions such as "Is the customer inquiry a complaint or a service request?". Above all, however, they handle generative tasks in the sense of classic "Generative AI", such as dynamically extracting topics from a customer query: "Extract the top three keywords from the text and return only a list of keywords, use the following scheme: keyword 1, keyword 2, keyword 3". Integrating internal company data and domain-specific data significantly improves the precision and quality of the output of large language models (LLMs) - a must-have for reliable, automatically generated answers. Furthermore, when LLMs run on-premise or in a private cloud, companies retain complete data sovereignty: neither data creators nor data owners have to give up control over their data resources, and with it their strategic value.
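The two usage styles described above can be sketched as plain prompt templates. This is a minimal illustration, not a prescribed implementation: the function names, the `service_request` label format, and the answer parser are assumptions made for this example, and the call to an actual LLM is deliberately left out.

```python
# Hypothetical helpers for illustration only; none of these names come from a specific product.

def build_classification_prompt(inquiry: str) -> str:
    """Discriminative use: ask the model to choose exactly one of two labels."""
    return (
        "Is the customer inquiry a complaint or a service request? "
        "Answer with exactly one word: complaint or service_request.\n\n"
        f"Customer inquiry: {inquiry}"
    )


def build_keyword_prompt(text: str) -> str:
    """Generative use: ask the model to extract topics in a fixed output scheme."""
    return (
        "Extract the top three keywords from the text and return only a list "
        "of keywords, use the following scheme: keyword 1, keyword 2, keyword 3\n\n"
        f"Text: {text}"
    )


def parse_keywords(model_output: str) -> list[str]:
    """Turn a 'keyword 1, keyword 2, keyword 3' answer into a clean list."""
    return [part.strip() for part in model_output.split(",") if part.strip()]
```

Fixing the output scheme in the prompt is what makes the answer machine-readable, so that a downstream system can process it without manual review.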

On-Premise Operating Model

One way to use generative AI to analyze sensitive enterprise data is to implement the technology on-premise. In this approach, LLMs and associated infrastructure are operated within the enterprise network. This operating model provides organizations with maximum control over their data and ensures compliance with internal security policies and standards.

The benefits of an on-premise implementation are obvious:

- Strong data security: Confidential enterprise data remains within the enterprise boundaries and is subject to the strict security policies of the internal network.

- High flexibility: The infrastructure can be extended or adapted as needed to meet the specific requirements of the enterprise (especially relevant for operators of critical infrastructures or enterprises in the special public interest).

- Lower latency: Since data analysis is performed on-site, latency is minimized, enabling faster processing and analysis.

Private Cloud Operating Model

As an alternative to on-premise implementations, enterprises can operate Generative AI models for the analysis of sensitive company data in a private cloud. In this operating model, LLMs and the required infrastructure are hosted in a dedicated cloud environment used exclusively by the enterprise. Private clouds offer similar benefits to on-premise deployments, but with additional flexibility and cost advantages, such as:

- Better scalability: Private clouds enable companies to scale resources and adjust computing capacity as required (e.g. on-demand use of expensive GPU capacity for model training).

- Cost efficiency: By using a private cloud, companies can save costs on purchasing and maintaining their own infrastructure.

Implementation of Generative AI

For using generative AI in an on-premise or private cloud environment, enterprise AI platforms such as AltaSigma are recommended. They provide all relevant functionalities for the standardized development and efficient operation of generative AI models in one integrated toolbox, e.g.:

- Flexible chat UI for entering prompts, visualizing source references and evaluating the confidence of sources,

- Model repositories for managing generative AI foundation models (available from Hugging Face, among others),

- Tools for creating retrieval-augmented generation (RAG) pipelines for querying structured and unstructured company data,

- Vector database for storing and updating embeddings,

- Data management functionalities to automate the preparation of relevant enterprise and domain-specific data,

- Transfer learning capabilities to "inject" the company and domain-specific knowledge into AI foundation models,

- MLOps functionalities for deployment and operation of Generative AI models,

- REST APIs for seamless integration into third-party applications, and

- Sophisticated role and rights management to control access to LLMs as well as to underlying company data.
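How a vector database and a RAG pipeline from the list above fit together can be sketched in a few lines. This is a simplified illustration under stated assumptions: the bag-of-words `embed` function stands in for a real embedding model, `InMemoryVectorStore` stands in for a real vector database, and all names and sample texts are hypothetical rather than taken from AltaSigma or any other platform.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (assumption: a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database that stores and updates embeddings."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Storing under the same id again replaces the old text and embedding.
        self._items[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 2) -> list[tuple[str, str, float]]:
        q = embed(query)
        scored = [(doc_id, text, cosine(q, vec)) for doc_id, (text, vec) in self._items.items()]
        scored.sort(key=lambda item: item[2], reverse=True)
        return scored[:k]


def build_rag_prompt(question: str, hits: list[tuple[str, str, float]]) -> str:
    """Put the retrieved passages, with their source ids, in front of the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text, _ in hits)
    return (
        "Answer using only the context below and cite the source ids.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


store = InMemoryVectorStore()
store.upsert("policy-1", "Refunds are processed within 14 days of receiving the returned item.")
store.upsert("policy-2", "Support is available by phone on weekdays.")
hits = store.search("How long do refunds take?", k=1)
prompt = build_rag_prompt("How long do refunds take?", hits)
```

The resulting `prompt` would then be sent to the LLM. Because the source ids travel with the retrieved passages, a chat UI can display them as source references next to the answer.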

Enterprise AI platforms come with many functionalities and standards to operate domain-specific Generative AI models on-premise, with a similar precision and "look and feel" to that of commercial applications such as ChatGPT.

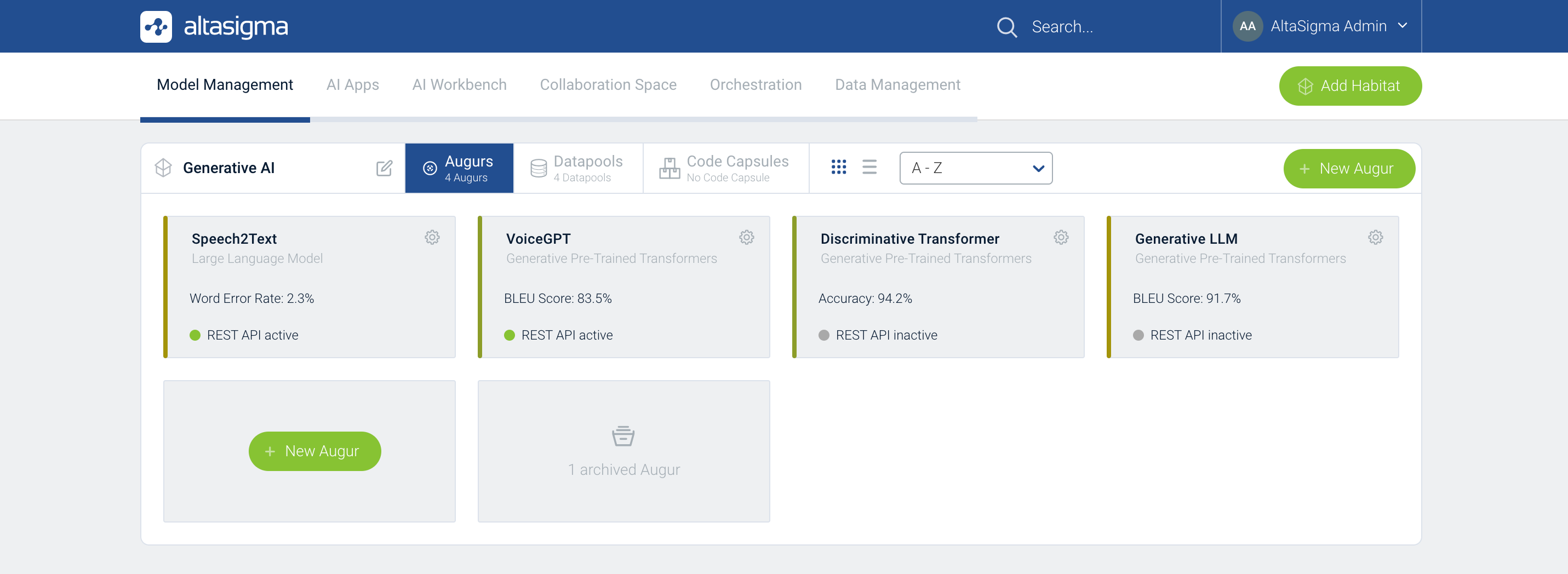

Management of Generative AI Models in AltaSigma

FAQs on Generative AI for the analysis of sensitive enterprise data

How does Generative AI work when analyzing enterprise data?

Generative AI uses deep learning algorithms to train models that can generate new data resembling the data they were trained on. This generated data can then be used for downstream analysis to gain valuable insights.

What are the advantages of on-premise implementations compared to private clouds?

On-premise implementations offer organizations greater control over their data and enable tight integration with existing systems. In addition, specific security policies and standards can be better adhered to.

What are the benefits of a private cloud implementation?

A private cloud primarily offers enterprises flexibility in scaling resources as well as cost savings through on-demand use of expensive computing capacity (mainly GPUs).

How can Generative AI contribute to the analysis of sensitive enterprise data?

Aligning Generative AI models with enterprise and domain-specific data enables organizations to significantly increase the precision and quality of these models. This in turn improves the quality and reliability of automated decisions as well as the identification of trends and patterns.

What security measures should be taken when using Generative AI for sensitive enterprise data?

It is important to take adequate security measures: control access to sensitive data, apply encryption techniques, and implement strict access and authentication policies. Enterprise AI platforms usually comply with these security requirements.
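One of these measures, role-based access control, can be illustrated with a short sketch. It assumes that each document carries a set of roles allowed to read it and that documents are filtered before they are passed to an LLM. The `Document` class, the role names, and the sample data are hypothetical and only serve this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this document


def visible_documents(docs: list[Document], user_roles: set[str]) -> list[Document]:
    """Keep only documents for which the user holds at least one allowed role."""
    return [doc for doc in docs if doc.allowed_roles & user_roles]


docs = [
    Document("hr-1", "Salary bands for 2024", frozenset({"hr"})),
    Document("kb-1", "How to reset a customer password", frozenset({"support", "hr"})),
]

# A support agent sees only the knowledge-base article, not the HR document.
support_view = visible_documents(docs, {"support"})
```

Filtering before retrieval means that restricted content never reaches the model's prompt for a user who is not allowed to see it.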

How can custom generative AI models be developed for enterprise data analytics?

Developing customized generative AI models requires a fundamental understanding of the specific requirements of the enterprise and significant knowledge of machine learning. Enterprise AI platforms such as AltaSigma provide all the functionalities to facilitate the development and operation of AI models in a highly resource-efficient manner.

Conclusion

Leveraging Generative AI to process and analyze sensitive enterprise data enables enterprises to participate directly in current AI developments, gaining valuable insights from their own data and deriving better automated business decisions. Both on-premise implementations and private clouds offer secure and flexible options for implementing Generative AI while ensuring data sovereignty. When selecting the appropriate operating model, organizations should consider their specific requirements, security policies, and integration with existing system environments. By properly leveraging Generative AI on their own data, organizations can improve their analytical maturity and significantly increase the quality of their data analytics, which will strengthen their position in the current competitive landscape.